AnySafe: Adapting Latent Safety Filters at Runtime by Parameterizing Safety Constraints in the Latent Space


Overview

AnySafe is a framework for adapting robot safety filters to user-specified constraints at runtime. Traditional latent-space safety filters, derived from Hamilton-Jacobi reachability analysis, assume that the safety constraint is fixed—limiting adaptability when environments or user goals change. AnySafe removes this limitation by conditioning the safety filter on an embedding of an image that represents an undesirable state, allowing the robot to flexibly redefine what “unsafe” means during deployment.

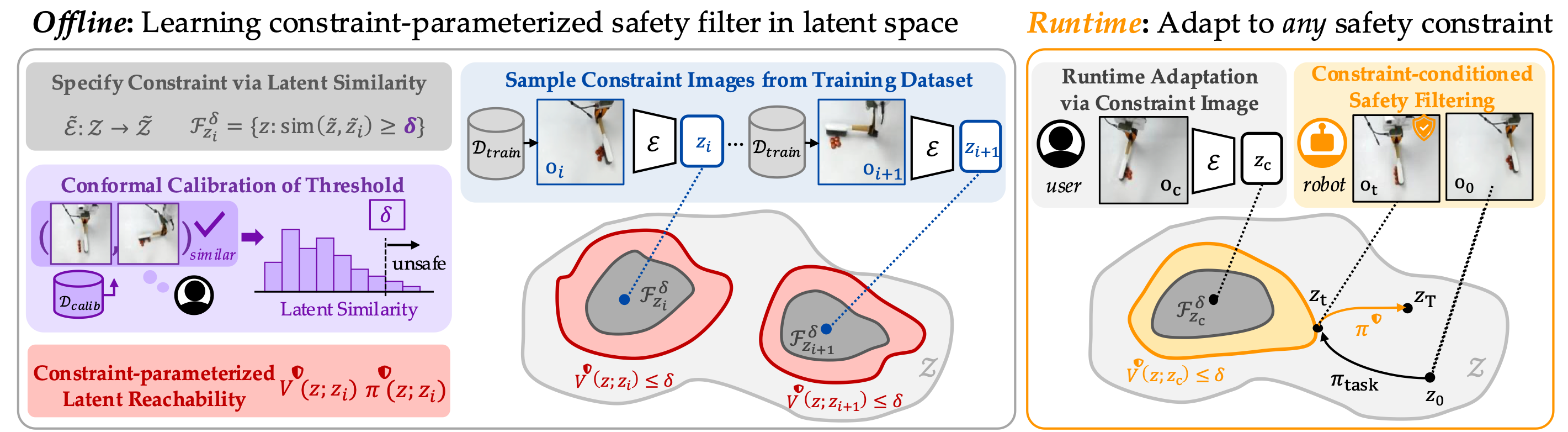

Framework

Left: The constraint-parameterized latent safety filter is trained by sampling constraint images from the world model (WM) training dataset, treating any image as a possible test-time safety constraint. Safety constraints are specified using a latent-space similarity measure, with a calibrated threshold that defines the size of the failure set. Right: At runtime, the safety filter adapts to any safety constraint specified by a user-provided image.
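As a minimal sketch of how a similarity threshold can define a failure set, the snippet below assumes cosine similarity between latent embeddings and treats a state as failed when its similarity to the constraint embedding reaches the threshold. The choice of cosine similarity, the sign convention, and the names `failure_margin` and `in_failure_set` are illustrative assumptions, not the AnySafe implementation.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def failure_margin(z_state, z_constraint, threshold):
    """Signed margin (assumed convention): <= 0 inside the failure set, > 0 outside."""
    return threshold - cosine_similarity(z_state, z_constraint)


def in_failure_set(z_state, z_constraint, threshold):
    """A state fails when it is at least `threshold`-similar to the constraint."""
    return failure_margin(z_state, z_constraint, threshold) <= 0.0


# Hypothetical 2-D embeddings, for illustration only.
z_c = [1.0, 0.0]
print(in_failure_set([0.9, 0.1], z_c, threshold=0.8))  # close to the constraint
print(in_failure_set([0.0, 1.0], z_c, threshold=0.8))  # orthogonal to the constraint
```

Under this convention, lowering the threshold enlarges the failure set, so the same constraint image can describe a tighter or looser notion of failure.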

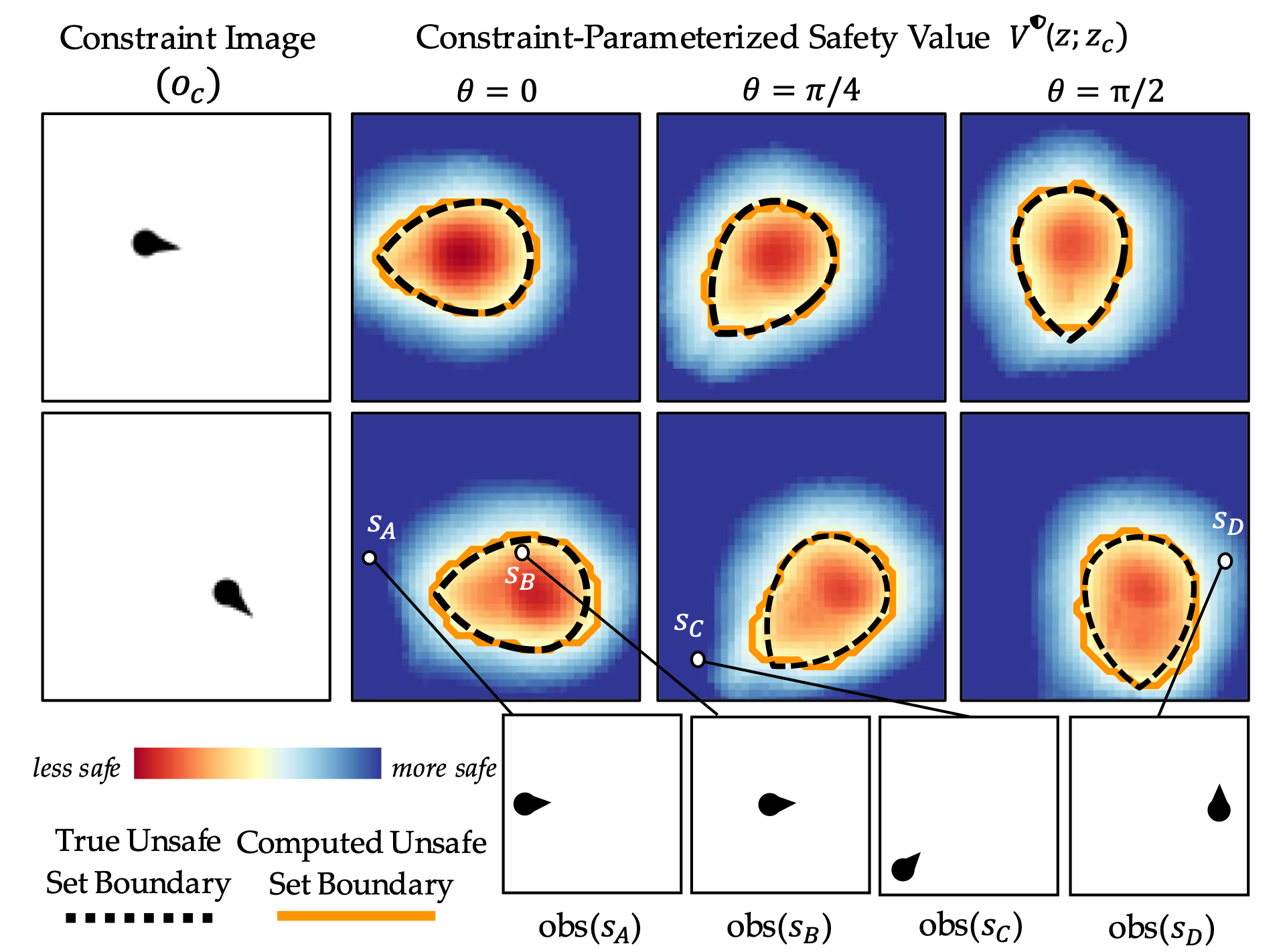

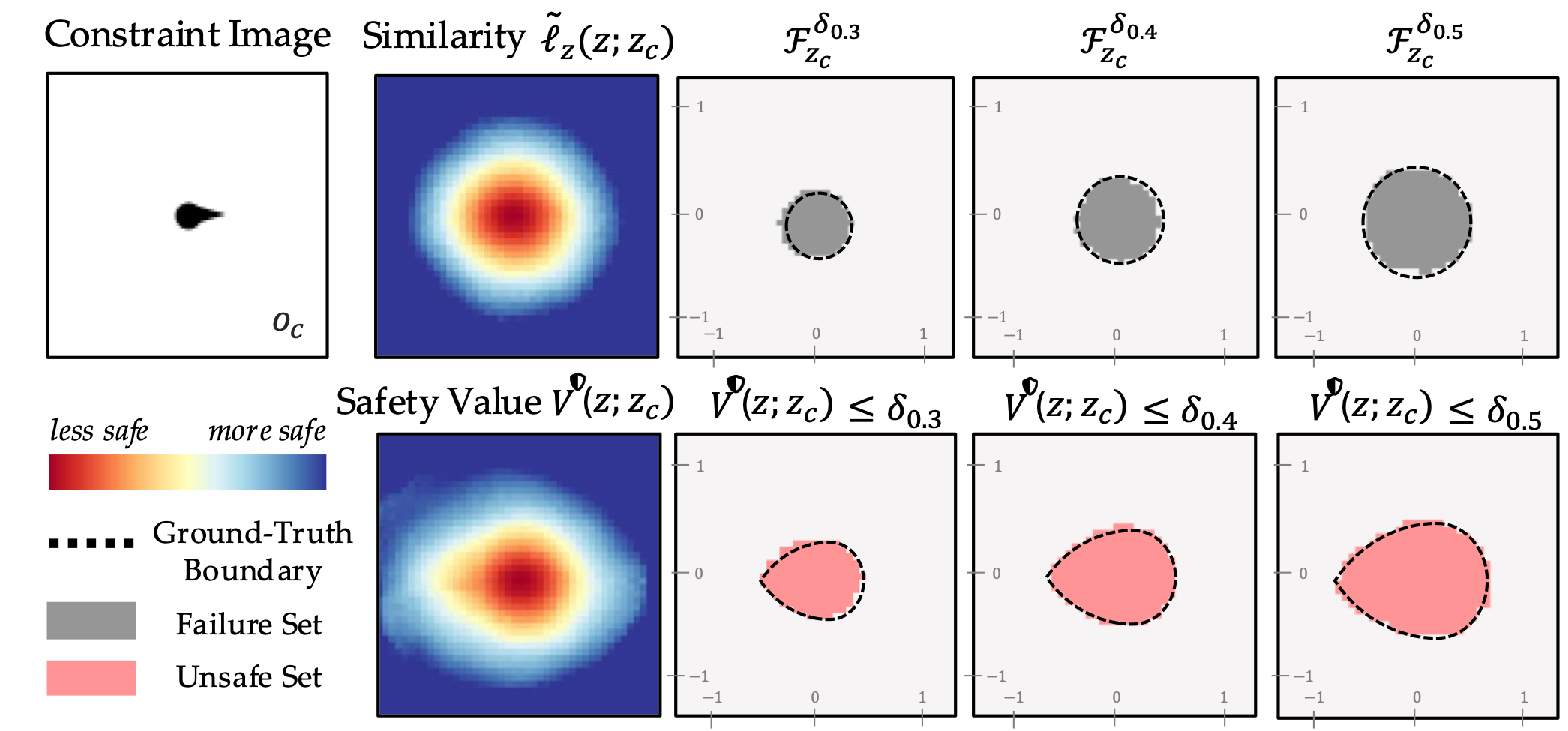

Dubins Car: Qualitative Results

AnySafe successfully adapts to diverse safety constraints in the Dubins Car environment by conditioning the safety filter on different constraint representations. When visualized across various headings, the learned value functions accurately reflect the shape and location of the unsafe set for each specified constraint. This demonstrates that the latent safety filter can generalize to new constraint configurations while closely matching the unsafe set computed by a ground-truth solver.
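For readers unfamiliar with the environment, a Dubins car moves at constant speed and is steered only through its turn rate, which is why the unsafe set depends on heading. The sketch below is a standard forward-Euler discretization of those dynamics; the speed, time step, and function name are assumptions for illustration and are not taken from the AnySafe experiments.

```python
import numpy as np


def dubins_step(state, turn_rate, speed=1.0, dt=0.05):
    """One Euler step of the Dubins car: state = (x, y, heading)."""
    x, y, theta = state
    x_next = x + speed * np.cos(theta) * dt
    y_next = y + speed * np.sin(theta) * dt
    # Wrap the heading to [-pi, pi) so it stays comparable across steps.
    theta_next = (theta + turn_rate * dt + np.pi) % (2 * np.pi) - np.pi
    return np.array([x_next, y_next, theta_next])


# Drive straight along +x, then turn left at 1 rad/s for a few steps.
state = np.array([0.0, 0.0, 0.0])
state = dubins_step(state, turn_rate=0.0)
for _ in range(10):
    state = dubins_step(state, turn_rate=1.0)
print(state)
```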

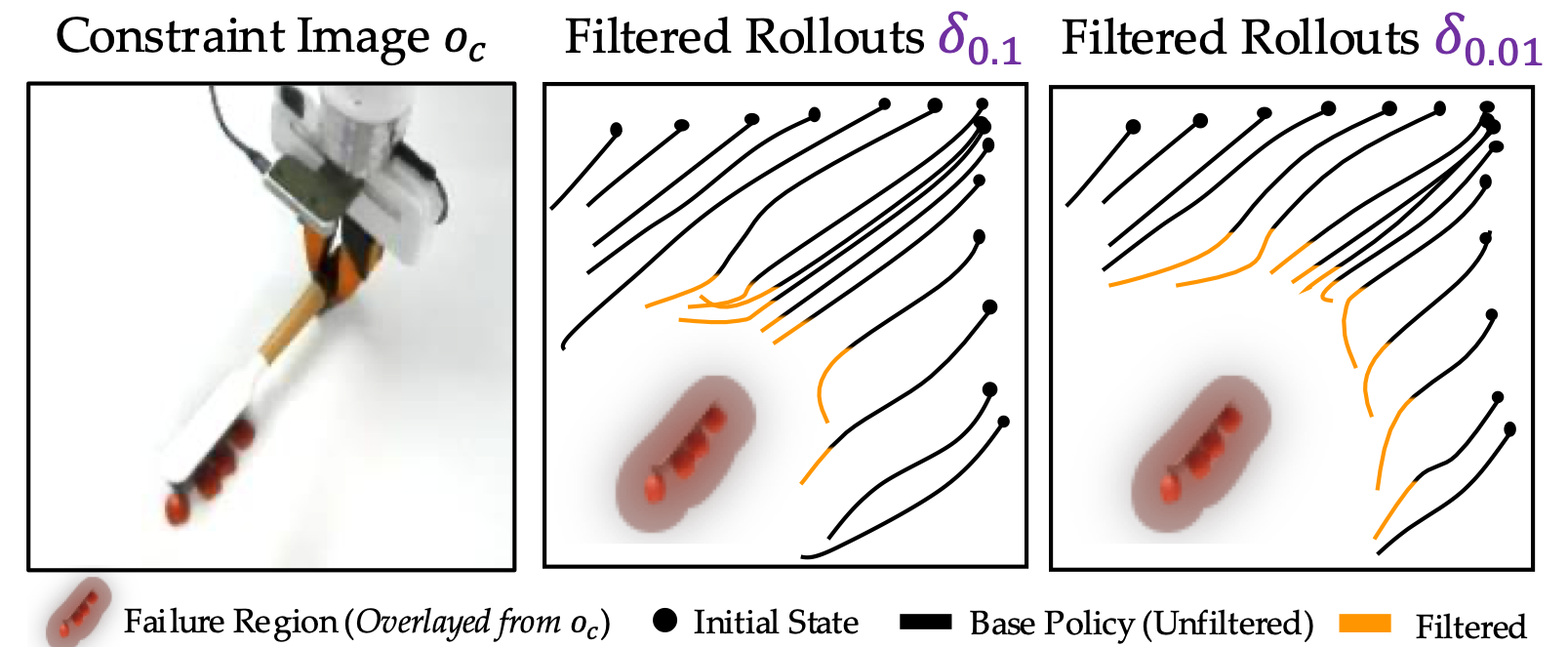

Dubins Car: Conformal Calibration

By calibrating the latent similarity threshold on different datasets, AnySafe can adjust the effective size of the unsafe set to match the user's definition of “failure.” The results show that conformal calibration provides a principled way to tune the conservativeness of the safety filter without retraining the model: larger thresholds yield less conservative unsafe regions, while smaller thresholds enforce more cautious behavior.
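One standard way to pick such a threshold is split conformal prediction. The sketch below assumes a calibration set of similarity scores taken from states that the user labels as failures, and returns a threshold that a new failure state would meet or exceed with probability at least 1 − α, provided the calibration and test scores are exchangeable. Here α is the allowed miss rate. The function name and the scores are hypothetical, and the exact calibration procedure used by AnySafe may differ.

```python
import math

import numpy as np


def calibrate_threshold(failure_similarities, alpha):
    """Split-conformal lower bound on the similarity of failure states.

    Returns the k-th largest calibration score with k = ceil((n + 1) * (1 - alpha)).
    If k exceeds n, no finite threshold gives the guarantee, so return -inf
    (every state is then treated as a failure).
    """
    sims = np.sort(np.asarray(failure_similarities, dtype=float))[::-1]  # descending
    n = sims.size
    k = math.ceil((n + 1) * (1.0 - alpha))
    if k > n:
        return -math.inf
    return float(sims[k - 1])


# Hypothetical calibration scores, for illustration only.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.95, 0.85, 0.75, 0.65]
for alpha in (0.5, 0.25):
    print(alpha, calibrate_threshold(scores, alpha))
```

In this sketch a smaller α produces a lower threshold, so more states count as failures and the filter becomes more cautious.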

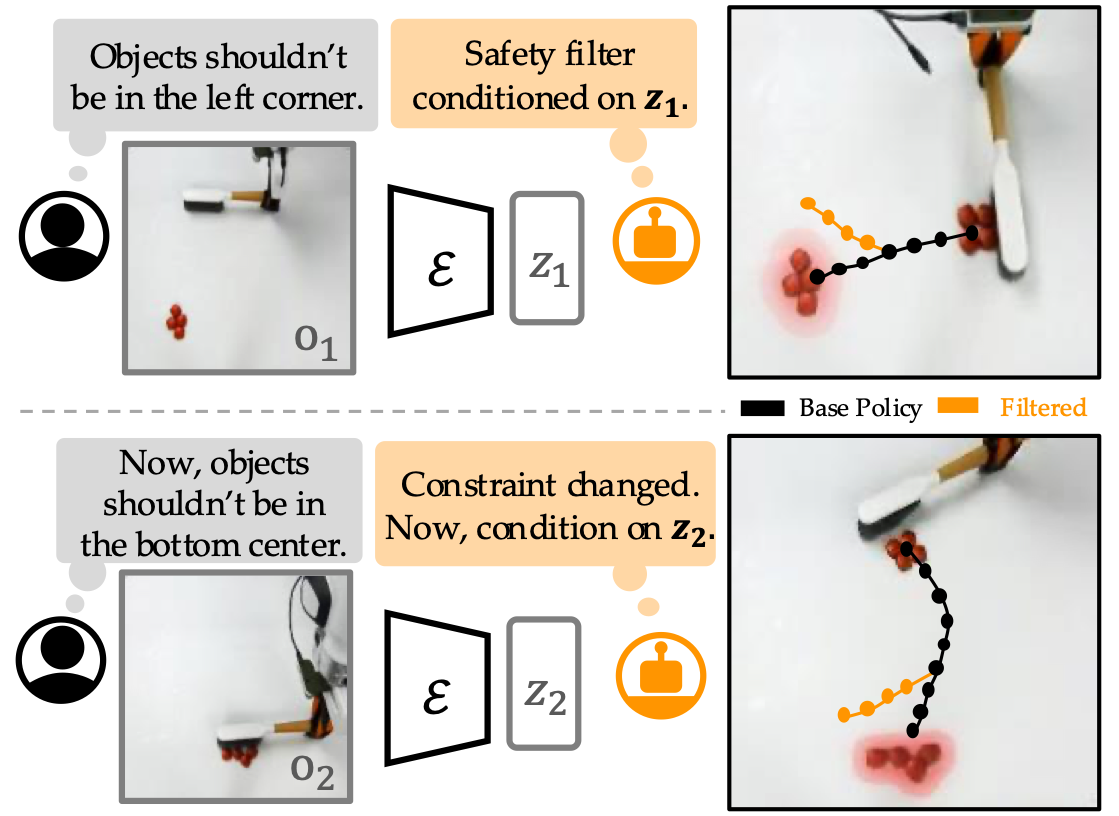

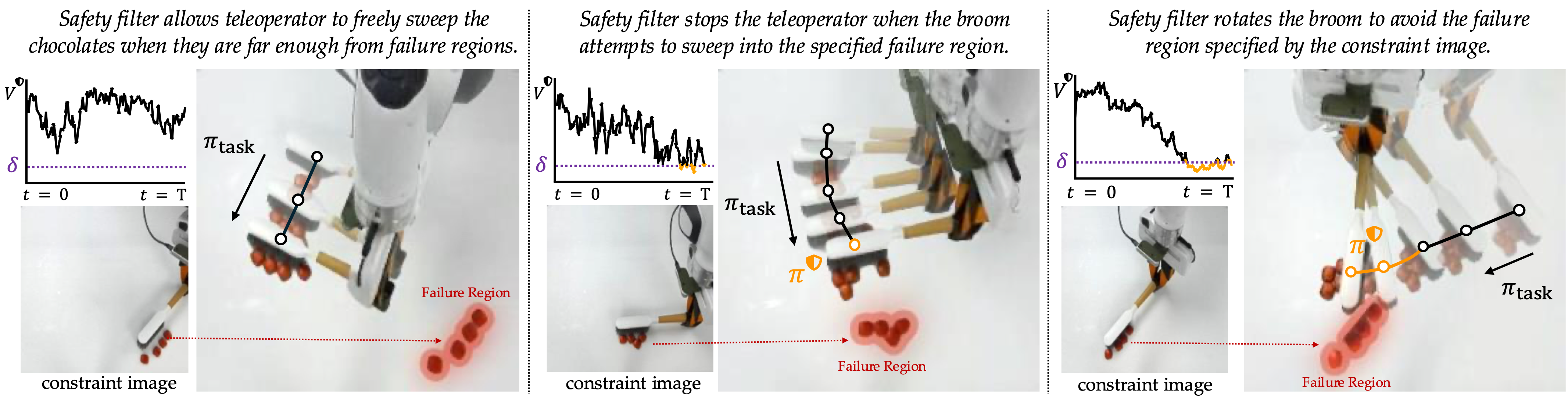

Hardware Experiments (Sweeper): Qualitative Results

On a real Franka manipulator sweeping task, AnySafe adapts its safety behavior in response to different constraint images provided at runtime. The system allows free motion when objects are far from the constraint region but automatically halts or redirects motion as the robot approaches the user-specified unsafe area, demonstrating flexible and intuitive runtime safety adaptation from visual inputs alone.
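The halting and redirecting behavior described here matches a common least-restrictive filtering pattern: the task action runs while a safety value stays above a margin, and a fallback action takes over otherwise. The sketch below assumes a constraint-conditioned value function that is positive for safe states. `value_fn`, `safe_policy`, and the toy stand-ins are hypothetical placeholders for learned components, not the AnySafe implementation.

```python
import numpy as np


def filter_action(z, z_constraint, task_action, safe_policy, value_fn, epsilon=0.0):
    """Return (action, overridden) under a least-restrictive switching rule."""
    if value_fn(z, z_constraint) > epsilon:
        return task_action, False  # safe enough: pass the task action through
    return safe_policy(z, z_constraint), True  # near the unsafe set: override


def toy_value(z, z_c):
    """Toy value: distance to the constraint minus a 0.5 safety radius."""
    return float(np.linalg.norm(np.asarray(z, float) - np.asarray(z_c, float)) - 0.5)


def toy_safe_policy(z, z_c):
    """Toy fallback: unit step directly away from the constraint."""
    d = np.asarray(z, float) - np.asarray(z_c, float)
    return d / (np.linalg.norm(d) + 1e-8)


z_c = np.zeros(2)                 # constraint location, swappable at runtime
task = np.array([-1.0, 0.0])      # task action pushes toward the constraint
print(filter_action(np.array([2.0, 0.0]), z_c, task, toy_safe_policy, toy_value))
print(filter_action(np.array([0.3, 0.0]), z_c, task, toy_safe_policy, toy_value))
```

Because the constraint enters only as an argument, changing `z_c` at runtime changes where the filter intervenes without touching the filter itself.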

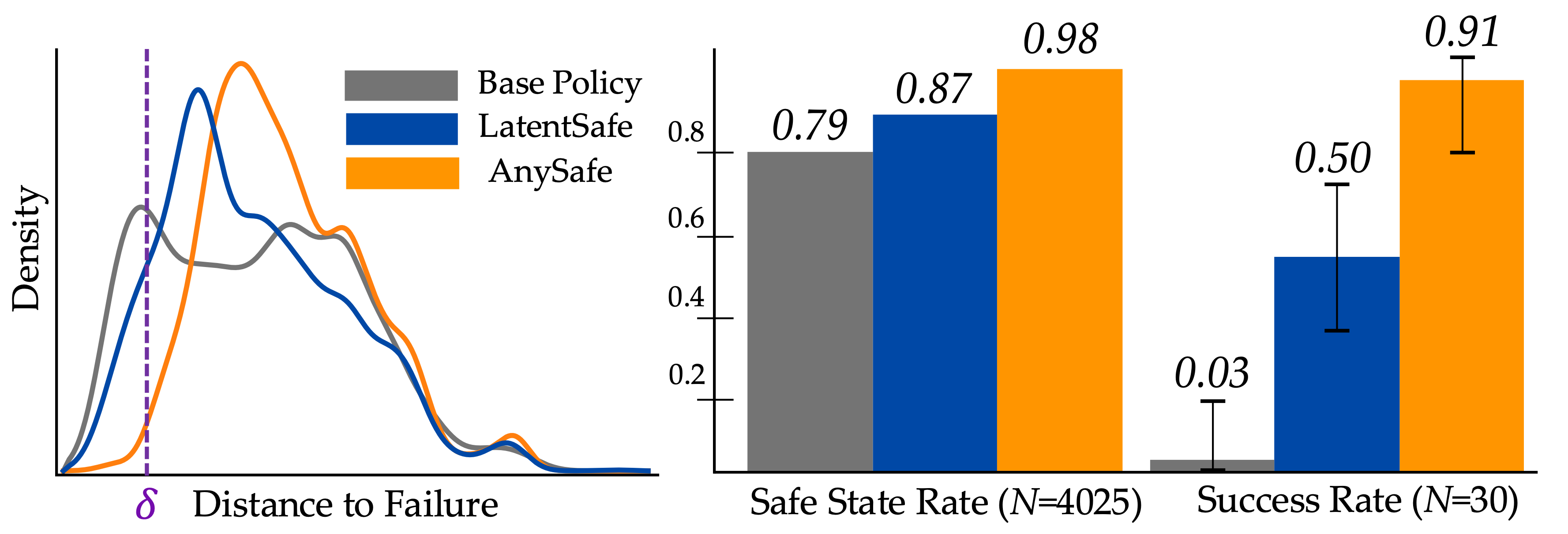

Hardware Experiments (Sweeper): Quantitative Results

Quantitative experiments compare AnySafe to fixed latent safety filters trained for specific constraints (LatentSafe). Across 30 test-time constraint conditions, AnySafe achieves higher success and safe-state rates while maintaining distances from unsafe regions above calibrated thresholds. These results highlight that AnySafe preserves task performance while providing adaptive, constraint-specific safety guarantees at runtime.

Hardware Experiments (Sweeper): Ablation

In this experiment, the Franka manipulator performs a sweeping task where the goal is to avoid knocking objects into specific regions on a tabletop, each defined by a visual constraint image. Three separate fixed latent safety filters (LatentSafe) were trained—each corresponding to one failure region. In contrast, AnySafe was trained as a single constraint-parameterized model that conditions on a user-provided constraint image at runtime. When deployed, AnySafe successfully reproduces the behaviors of all three specialized LatentSafe filters within their respective regions and, crucially, generalizes to a new unseen constraint located between them—something the fixed filters fail to capture. Moreover, we show that using raw DINOv2 features without the learned failure projector results in poorly aligned safety values, emphasizing the importance of learning a failure-relevant latent space for effective constraint generalization.

Hardware Experiments (Sweeper): Conformal Calibration

By varying the conformal calibration confidence level α, AnySafe allows users to control how conservatively the robot intervenes. Smaller α values lead to earlier, more cautious filtering, while larger α values enable less conservative operation. This experiment demonstrates how calibration enables runtime control over the robot's safety margin in a principled and interpretable way.